The 7 Best All-in-One Personal Computers

This wiki has been updated 39 times since it was first published in March of 2015. In the past, every high-performance computer consisted of a large, rectangular box lined with fans and brackets. Today, some of the most cutting-edge components can fit neatly in low-profile cases that are barely noticeable behind their accompanying HD monitors. These all-in-one PCs will save you space while bringing you incredible functionality, crisp video, and high-octane gameplay. When users buy our independently chosen editorial recommendations, we may earn commissions to help fund the Wiki.

Editor's Notes

March 30, 2021:

Generally speaking, all-in-one PCs are considerably less powerful than their full-size desktop counterparts, due largely to space and heat concerns. That hasn't stopped manufacturers from introducing models like the HP Envy, which sports an RTX 2070 GPU and is great for Full HD gaming. Alternatively, the Dell Inspiron 7700 doesn't have a discrete graphics card, but its Intel Xe integrated GPU is actually capable of decent performance in many games.

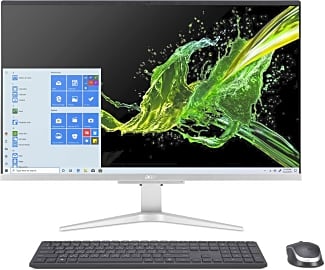

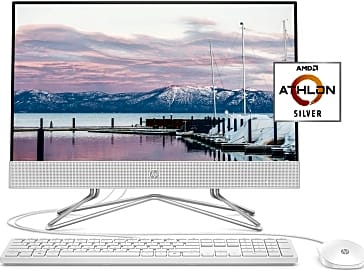

If gaming isn't important to you, the Lenovo IdeaCentre AIO 3 offers the best balance of price and processing power, and the Dell Inspiron 24 Touch is close behind it. The Acer Aspire and HP 22-Inch are both excellent choices if you don't need extra-snappy hardware, although their functionality is pretty limited outside of everyday tasks. Finally, if form is as important to you as function, the Apple iMac is tough to beat, although, like most Apple products, it does require a significant investment.

January 27, 2020:

While this category has a pretty reliable yearly turnover, we made only slight upgrades to the Apple products that fit the bill. The Apple iMac Pro, in particular, is due for a refresh, so we replaced the 8-core model with the 10-core configuration. If Apple does release a new model after you buy this one, the extra cores should help it last a bit longer before its specs become obsolete.

Elsewhere, we saw upgrades from all the major players, with the Dell OptiPlex 27 7770 proving to be a surprisingly capable offering. It boasts more RAM than much of the competition and offers 8TB of storage in a landscape of models that seem to assume the streaming revolution has made storage space unnecessary. Video and photo editors know otherwise, however, as do graphic designers, and all of them would likely benefit from this model's speed and reliability if they aren't already betrothed to Apple.

Evolution of the Personal Computer

The first electronic digital computer is often credited to American physicist John Atanasoff, who designed it in 1939. Even so, it would be another 32 years before the personal computer made its debut in 1971. The invention of the integrated circuit brought the computer to new heights: large numbers of miniature transistors were packed onto thin silicon chips, making computers faster, smaller, more powerful, and less expensive. It was truly a revolution for the computer age.

These powerful computers would enable scientists to create the next generation of personal computers. Before long, the microprocessor was developed, which effectively brought the computer into ordinary homes. By the mid-1970s, the first computer kits were available for home assembly.

Noticing a gap in the market, some companies began assembling computers for their customers, which boosted popularity and made the computer more user-friendly. The human need to socialize, coupled with the ability of computers to form networks, eventually led to the World Wide Web, which brought the internet to the general public in the early 1990s.

Since the 1990s, computers have become smaller, more efficient, and much more powerful. The slowest computer of the modern era is far ahead of computers created just 10 years ago. This rapid evolution has helped to shape our entire world around the technologies in use today.

The Rise Of Touch Screen Technology

Touch screen devices may seem like an invention of the modern era, but the first one was actually created in 1965. Since then, the touch screen has spread throughout the technology sector thanks to its tactile, intuitive ease of use.

The first touch displays were capacitive touch screens. A capacitive screen coats an insulator, such as glass, with a transparent conductor that holds a small electrical charge. Because the human body conducts electricity, a fingertip touching the surface draws away some of that charge, and the screen's controller registers a touch at that spot. The first capacitive displays were very basic: they could register only one touch at a time and could not measure how much pressure was being applied.

Resistive touch screens debuted next. Developed in the 1970s, they did not rely on the electrical properties of the user's body. A basic resistive touch screen places two flexible, electrically conductive layers over the display, separated by a tiny gap; pressing on the top layer pushes it into contact with the one beneath, and the controller calculates where the touch occurred. This may not sound like a major advancement, but it removed the need to touch the screen with a bare finger, so a stylus, a gloved hand, or almost any other object could be used to input data. Though revolutionary at first, resistive touch screens are rarely used on personal computers anymore. Today they mostly appear on point-of-sale touchpads in places like restaurants and grocery stores.

It wasn't until the 1980s that touch screen technology advanced toward what we now know from tablets and personal computers. As large companies scrambled to create the next big touch screen technology, a relatively unknown player stepped forward to bring the world multi-touch technology, which paved the way for the touch screen computers and tablets used today.

Is Holographic Technology Next For Personal Computers?

The notion of a hologram usually brings to mind scenes from almost any science fiction series. For audiences who have already experienced 3D theaters and the 4D films found at many amusement parks, holographic technology seems to be the only frontier yet to be mastered.

Contrary to popular belief, most of the holograms seen today are not actual holograms: they are projections using a Victorian-era illusion known as Pepper's ghost. The illusion was popularized by scientific demonstrator John H. Pepper in the 1860s and is still in use today. In its most basic form, it involves two rooms: the stage and a hidden room. Viewers sit facing the stage, while a large pane of glass, angled between them and the stage, reflects a brightly lit figure in the hidden room, so the figure appears to stand on stage in front of the audience.

These reflections can make objects seem to appear out of thin air, or turn one object on stage into another, with a simple flash of light masking the exchange. The trick was as effective in the 19th century as it is today. Though the basic principle remains the same, modern Pepper's ghost illusions are much more elaborate, often using digital effects and projections to create what viewers perceive as a hologram.

True hologram technology on your desktop is still far off. The development of the laser in the 1960s gave birth to the hologram as it is known today, and since then holograms have found their way into many aspects of daily life. One example is the security hologram used to help verify a credit card's authenticity. A true hologram is made by recording how laser light scattered off an object interferes with a reference beam; when that recording is illuminated later, it plays back a detailed, three-dimensional image of the object.

Building this technology into displays poses significant obstacles. The sheer amount of data required would be very difficult to handle in a usable device that could fit on a tabletop or be moved easily. The other basic challenge is that the user may be looking at the unit from any number of angles, which affects how the image must be displayed. This does not mean that holographic displays will never exist, though; many companies are researching the technology's use in consumer products.